The GPT Suicides and the Delusion of Alignment

Plus: Sale on Book Preorders; The Joe Rogan Scam; Democratic Dark Money; and more.

Welcome to your weekly Dark Markets technology fraud roundup, pushed back one day for Labor Day. Don’t forget to catch up on our weekend deep dive into Bullish, the recent IPO hit with an incredibly sketchy backstory.

Stealing the Future Preorder Sale

From today until September 5th, Barnes and Noble is running a sale on all book pre-orders - including mine. Save 25% on Stealing the Future: Sam Bankman-Fried, Elite Fraud, and the Cult of Techno-Utopia at BN.com with promo code PREORDER25.

It’s a chance to get a great deal without giving Amazon a cut.

The ChatGPT Suicides: LLM Alignment is a Hallucination

The parents of 16-year-old Adam Raine have filed a wrongful death lawsuit against OpenAI after ChatGPT advised the teenager on planning his own suicide over several months, and even helped him write a suicide note.

“He would be here but for ChatGPT. I 100% believe that,” said Adam’s father Matt.

This follows similar incidents, including that of former Yahoo! executive Stein-Erik Soelberg, who apparently killed his 83-year-old mother and then himself after months of heavy ChatGPT use (and seemingly dwindling life prospects). Cases of ChatGPT-related delusion and schizophrenic breaks also appear to be rising.

I don’t think ChatGPT in these cases is in itself causing suicidal urges or mental instability, but it is clearly playing a role in exacerbating them. The underlying situations in these cases, with victims of all ages, prominently include isolation: Adam Raine was “using the artificial intelligence chatbot as a substitute for human companionship.” Some of the delusional cases show rampant paranoia.

This isolation, as much as any specific content created by ChatGPT, needs to be considered part of the package being sold by AI companies. From robot therapists to simulated love to always-on friends, the market isn’t stopping at trying to replace people at work, but angling to monetize all of your social needs.

That might or might not prove a workable business model, but it will be a catastrophic development for the human race.

OpenAI’s response has of course been laughably superficial: they will add new guidelines “that fit a teen’s unique stage of development,” a move which only highlights the fundamental fraud of LLM-based tools being advertised as “intelligence.” From training to these post-facto tweaks, they are simply weighted probability trees, trained on human inputs and with output massaged by humans at the other end, too.

LLMs have no ability to incorporate general principles or values, because they do not possess comprehension, much less consciousness. This makes Eliezer Yudkowsky’s sad quest for “aligned” artificial intelligences, machines that share human values, even more nonsensical in the specific case of LLMs than it was in its basic conception.

LLMs do not understand what a human being is, much less “a teen’s unique stage of development.” Attempts to filter or control their behavior will be equally ham-fisted - expect any discussion of suicide to be simply blocked in future versions of the tool. Because outside guidelines cannot shape an LLM’s logic with any nuance, banning large chunks of content is the only way forward. Public-facing LLMs will only grow more anemic as more high-risk topics are carved out with all the subtlety of brain surgery performed with a melon baller.

That’s because LLMs are not intelligences. They’re just more Mechanical Turks, and using them to assuage a sense of existential despair is no more effective than playing World of Warcraft and pretending you’ve had an actual adventure.

They are nightmarish simulacra of humanity that will leave you emptier inside – even if it takes you years to notice.

More Democratic Dark Money

A new Wired article by Taylor Lorenz puts a grim spin on the work of Chorus, a media incubator funded by the Democratic Party. Chorus pays a reported $8,000 a month to political content creators like David Pakman, seemingly above all to help boost pro-Israel voices.

The Joe Rogan “Comedy” Scam is a Tech Bubble

This video essay from Elephant Graveyard has garnered a ton of attention over the past week. It’s a devastating takedown of the insular and authoritarian nature of Joe Rogan’s “Comedy Mothership.” It devastatingly skewers Rogan and his flunkies as having huffed their own farts for so long that, if they were ever actually funny, they’ve lost sight of land in the fog of money and power. Most devastatingly, it highlights numerous direct statements from members of the clique about how futile it is to go it alone as a creative - all of these types are ultimately frightened conformists.

More to the point for readers of this newsletter, the essay devotes a lot of attention to Rogan’s direct relationships with tech-right figures like Peter Thiel, and offers a sharp reading of the way algorithmic digital culture rewards creators attuned to the lowest common denominator.

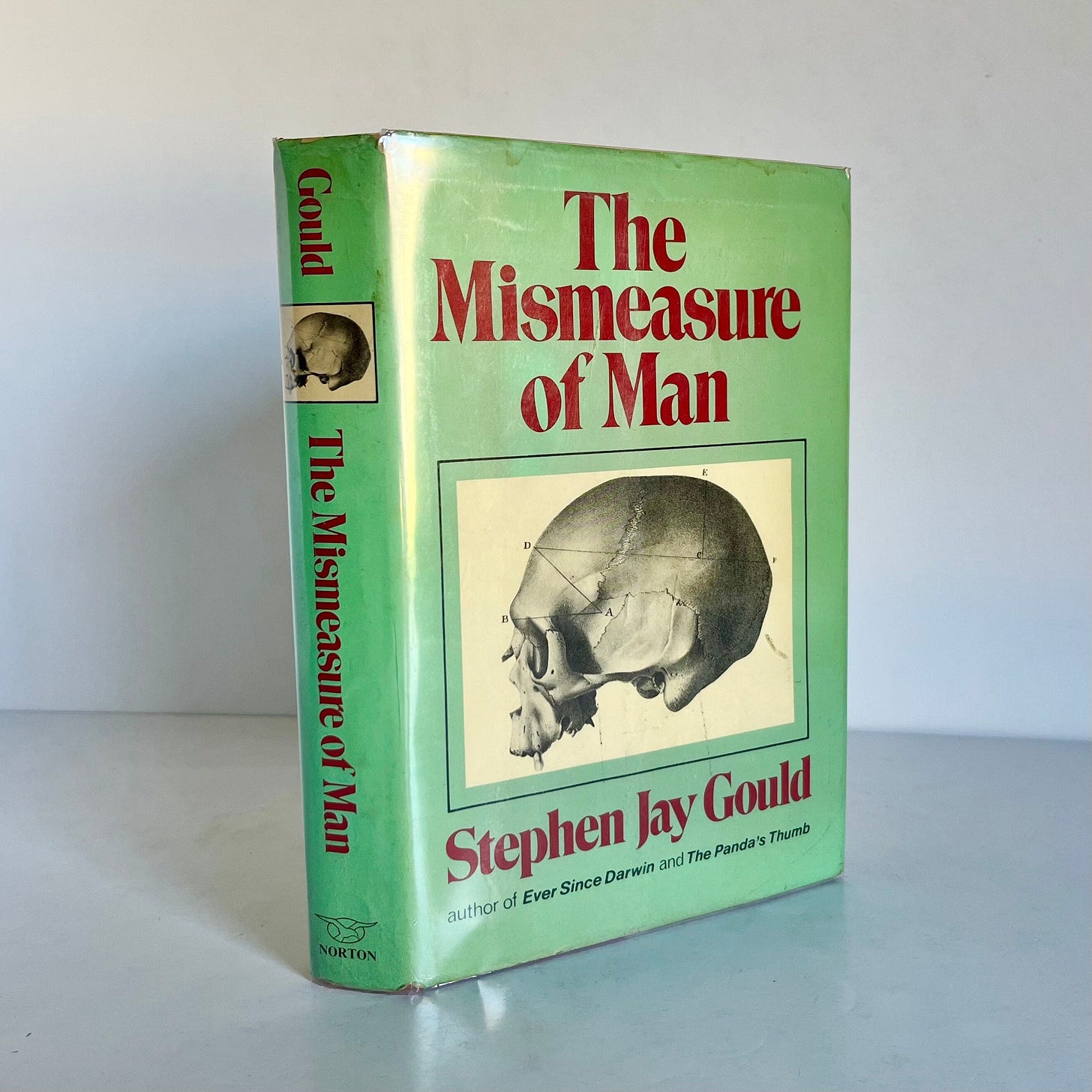

Book: The Mismeasure of Man, by Stephen Jay Gould

One of the most shocking developments of the Trump era has been the naked re-emergence of human biological essentialism in politics - the belief that racial and ethnic groups have inherently different capabilities, particularly differing intelligences. It’s a concept championed in books like Charles Murray and Richard Herrnstein’s The Bell Curve, first published in 1994, and recently re-inserted into popular consciousness by techno-rightoids like Lex Fridman.

The original publication of The Bell Curve prompted eminent evolutionary biologist Stephen Jay Gould to update The Mismeasure of Man, originally published in 1981, as a rebuttal of Murray’s racist and scientifically bankrupt ideas. As Gould unflinchingly points out, he was able to rebut Murray in advance because ideas of racial hierarchy and unitary intelligence tend to resurface in America whenever politics turns mean and stingy, since their real purpose is to justify social inequality.

But leaving the metapolitics aside, The Mismeasure of Man simply annihilates the supposed science behind racial essentialism. It goes as far as detailing statistical and data errors by early craniometrists and intelligence testers, such as their tendency to draw arbitrary lines around “racial” groups so that their scientific results matched their prejudices.

Even more fundamentally, Gould rips the very concept of a unitary, ranked intelligence to shreds. The concept of hierarchies of intelligence is embedded most clearly in devotion to “IQ,” a fundamentally meaningless metric that is almost exclusively a tool of social control - and which was, notably, a significant part of the logic of Effective Altruism.

It’s just one of the more subtle signs of the racism deeply embedded in EA’s soul, and should have been a major warning sign to FTX investors - scientific racism, ironically, is a huge red flag for stupidity and incompetence.

Isolation is always the problem. It's the same as when Facebook started going heavy into machine learning, long before their recent, odious chatbots that do not reveal themselves as non-human. It was driving people to fringe groups, taking them out of human interaction, radicalizing them. A lot of the content was fake, too: it might have been created by a human, but it was still fake, written by someone in another country, possibly chained to a computer, in between pig-butchering scams. Because the motive was profit (or election interference, for later profit), the result was similarly bad. It just couldn't be scaled as much, because even with scripts and some automation, quite a bit of real human labor was still needed, and Facebook's manipulation algorithms were only at evil-henchman level, not yet at demonic-eldritch level.

The problem is very simple. We have evolved to be social. Because all humans evolved in broadly the same way, together, this desire to be social has worked out pretty well: mostly, you would be interacting with others who wanted to collaborate in a non-zero-sum way and benefit from helping each other. But tech evolves far faster than biology, and AI is controlled by corporations that are not interested in non-zero-sum collaboration. They are interested in exploitation. They are the sociopaths who do exist among humans, but who have always been such a small minority that they never dissuaded the rest of us from being social. Suddenly, they can be everywhere, you can be forced to interact with them, and your brain, which evolved to interact with humans who are generally not out to be parasites, is easy pickings! Yay!

"That’s because LLMs are not intelligences."

Maybe. But I think the problem isn't so much the limitations of LLMs as the fact that they are developed and run for profit. Were it not for that profit motive, some of the most problematic "bugs" might not exist.

I read recently that the reason AI hallucinates is that there was a positive weighting for an answer, any answer, but no positive weighting for "I don't know." Why is that? Maybe it is to make people engage with it more. Some might defend this choice as necessary to improve the product: if people dismiss it, they'll never use it enough to give the feedback needed to make it better. But I don't believe that's the only reason. I think they could have it "guess" and say it's a guess, and people could tune it and make it better that way. I think they could have made it so responses were either "I'm not 100% sure, I have no citation for this, but ... I'm about 10% confident because of this inference based on [this] data I have" or "I'm 100% confident on this, here's a quote from the New England Journal of Medicine ' ... ', page 25 of [this] study, third paragraph." Basically, show their work. I bet they could have made it able to do that.

But they probably don't want to show sources, because a lot of those sources are probably copyrighted, and they are trying to sell a service. So regardless of whether hallucinating is more or less engaging, revealing that their sources are copyrighted might lead to them being forced to shut down their services, which would clearly mean no engagement at all. Either way, I think it's just the typical drug of choice for Silicon Valley: growth. They will sell their souls and their firstborn children for growth statistics to sell to VCs pre-IPO and plebes post-IPO. So they built it to be dishonest. And, apparently, deadly.