Apple's LLM Debunking has the AGI Faithful Sweating

Why skepticism is a superpower for the best tech company in the world.

We’re Back! After finishing a complete draft of the book, I took the first real vacation I’ve had in about two years - and of course, missed a massive news week. I’ll be getting caught up on some of that - though I can’t resist highlighting that Curtis Yarvin has revealed he had some Zoom dates with Caroline Ellison, further cementing the idea that EA is deeply entwined with racist eugenics.

Today, though, I’m focusing on The Illusion of Thinking, a big new Apple Research Paper debunking LLM Reasoning. Scroll on for that.

We took a low-budget jaunt to Shenandoah National Park and the surrounding area. Shenandoah, as part of the low-top Blue Ridge Mountains, has a lot of very approachable and incredibly beautiful hikes. I highly recommend the Skyland cabins; they’re a great compromise if you’re not quite up for tent camping.

I’m a big hiker. Simply being in nature is a huge physical and mental rejuvenator, and tapping humanity’s gift for uninterrupted long-distance walking connects you to pure species-being. The payoff is an incredible, lasting sense of calm - though living in New York City, especially without a car, makes hiking hard, so I treasure every chance I get to hike.

One element of the outdoors relevant to my interests here is *complexity.* Because they are trained on pre-existing data, no image LLM/GenAI can ever match the visual richness of … well, of any patch of reality, but especially not raw nature. This means consumption of generated images will inescapably degrade your cognitive abilities, relative to exposure to real plants and trees.

This is a proposition that may form a pillar of my next book, already taking shape …

The Apple LLM Paper and the AI Faithful

I understand AI relatively well on a technical level, but what really interests me is the way humans respond to it.

Way back in early 2023 I predicted that misrepresentations of AI as “thinking” and even “conscious” would be key to selling the public on its supposedly vast powers. I’m a very small fish in this debate compared to people like Gary Marcus and Grady Booch, actual computer scientists who have done their level best to try and pull this deceptive framing up from the roots - and been largely ignored.

Now there’s a skeptical voice the AGI Faithful are having a harder time ignoring - Apple.

In a new research paper, researchers from the most successful technology company of the past three decades conclude that any sense that Large Language Models are “thinking” is, and I quote, an “illusion.” The paper doesn’t just tackle pure “autofill” LLMs like early versions of ChatGPT; it also covers so-called “Large Reasoning Models,” which add features like “Chain of Thought” (CoT). Those models have been touted as the next generation of LLM-based AIs, but Apple’s researchers ultimately conclude that LRMs are no better at reasoning beyond their training data than standard LLMs.

And this is not some theoretical argument about the nature of thought, but an analysis of the process and its visible outcomes. One example focused on in the paper is the so-called “Tower of Hanoi” puzzle, which requires moving a stack of discs from one peg to another, one disc at a time, without ever placing a larger disc on top of a smaller one. You’re probably familiar with it.

The Tower of Hanoi is very easily solved by a tailored algorithm, because the solution to any size of Hanoi puzzle is a single recognizable and repeated procedure. Once a human figures out that method (and if they have the patience and precision) they can solve any size Hanoi.
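To make "a single recognizable and repeated procedure" concrete, here is a minimal sketch of the textbook recursive solution. It is not code from the Apple paper; the function names and the peg labels "A", "B", and "C" are my own choices for illustration. The idea: to move n discs, move the top n - 1 out of the way, move the largest disc, then move the n - 1 back on top of it.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the (from_peg, to_peg) moves that solve an n-disc puzzle.

    Peg labels are illustrative defaults, not anything from the paper.
    """
    if n == 0:
        return []
    return (
        hanoi(n - 1, source, spare, target)    # clear the top n-1 discs onto the spare peg
        + [(source, target)]                   # move the largest disc to the target
        + hanoi(n - 1, spare, target, source)  # restack the n-1 discs on top of it
    )


def is_valid_solution(n, moves, source="A", target="C", spare="B"):
    """Replay the moves and check that every one is legal and the puzzle ends solved."""
    pegs = {source: list(range(n, 0, -1)), target: [], spare: []}
    for src, dst in moves:
        if not pegs[src]:
            return False  # tried to move from an empty peg
        disc = pegs[src].pop()
        if pegs[dst] and pegs[dst][-1] < disc:
            return False  # tried to place a larger disc on a smaller one
        pegs[dst].append(disc)
    return pegs[target] == list(range(n, 0, -1))


if __name__ == "__main__":
    for discs in (7, 8):
        moves = hanoi(discs)
        print(discs, len(moves), is_valid_solution(discs, moves))
```

The same dozen lines work for any number of discs. The only thing that grows is the length of the answer: an n-disc puzzle takes 2^n - 1 moves, so 127 moves for 7 discs and 255 for 8.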

But LRMs cannot consistently solve this straightforward reasoning problem, because they do not understand it (or anything). Apple’s researchers found that Claude Sonnet solved a 7-disc Hanoi with only 80% accuracy, and couldn’t tackle 8 discs at all.

More strikingly, these models don’t even understand how to apply a solution they have been given outright. As Gary Marcus discusses with one of the researchers, in one of the experiments in the paper, “we give the solution algorithm to the model, and all it has to do is follow the steps. Yet, this is not helping their performance at all.”

All of this matters for practical reasons, obviously, because it shows that continued investment in larger data sets and more compute won’t make these tools into general-purpose reasoners usable for high-stakes decision-making. More philosophically, these findings matter because they show that scaling compute will never turn LLMs into Artificial General Intelligence, a.k.a. The God AI, a.k.a. The Singularity, which has been pushed aggressively by entities like OpenAI and (less loudly) Anthropic.

The premise that LLMs can scale into AGI is:

Motivated by the economic logic of the AI timelines scam, which attracts more funding by promising AGI sooner. Compute scaling was the easy (and wrong) answer to how we get from ChatGPT to Building God.

Inextricable from both the “AI Doom” narrative and the linear/measurable model of the universe and knowledge advanced by the Rationalists, who perversely fueled the current AI boom through promulgating fear of an all-powerful god-like machine intelligence.

As early as last November, Microsoft CEO Satya Nadella was already pointing out that compute “scaling laws” aren’t laws at all, but observations. That’s beginning to become clear for Moore’s law of processor scaling, and now Apple has found the limits of a pure-scaling approach to data processing, even if you bolt on memory and chain-of-reasoning.

Marcus points out that one of the main inherent *advantages* of LLMs over humans is memory - LLMs have a lot more of it. But as Chomba Bumpe (another ML dev and very smart critic of AGI hype) highlights, Apple found that LRMs don’t even fill their allotted memory. The process of inferential reasoning breaks down well before memory limits are reached.

Skepticism is a Superpower

The LLM defenders and AGI hopeful have of course circled the wagons to assert that this paper isn’t all it’s cracked up to be. One very revealing criticism from a mid-sized AI-booster account is that the paper was “written by an intern,” which shows a staggering but unsurprising lack of exposure to how actual research works. The paper’s lead author, Parshin Shojaee, is a computer science PhD candidate at Virginia Tech doing a research stint at Apple, not someone making coffee runs. Moreover, her coauthors include full-time machine learning engineers at Apple.

Just one example of how AI credulity is based on a profound lack of exposure to actual technology development, CS, and above all, information theory.

Another, more interesting set of objections has revolved around the idea that the paper is just Apple covering its ass because it’s “behind” on AI. This idea seems to be hugely seductive - this tweet got 34,000 likes:

It’s bullshit on several levels, but the most important one is that Apple has consciously and strategically been conservative on AI, and especially on the generative AI/LLMs that are at issue here. Apple has actually integrated a ton of (non-LLM) machine learning/AI into things like image recognition, but revealingly, it doesn’t get credit for it because it actually works. As in, doesn’t create weird mistakes.

This was what Tim Cook meant in October of last year when he said that Apple would be “not first, but best” on AI - as it has been with many other products, including the iPhone itself, which was revolutionary but still derived from primordial smartphones like the BlackBerry.

And here’s the thing - Apple has saved meaningful money by not trying to keep up with the likes of OpenAI. According to this report, Apple’s caution on AI came substantially not from Tim Cook, but CFO Luca Maestri, who scaled back Cook’s investment plans in 2023.

There are specific business reasons Apple was wise to be cautious with AI - it’s a hardware business, so it has to make sure AI enhances its core products. But more generally - and in specific contradiction to the Thielian neo-monopolist “zero to one” ethos - not dumping truckloads of cash into ideas with uncertain outcomes tends to be good for the long-term growth of an established business.

That doesn’t apply, of course, to startups like Anthropic and OpenAI, who have promised to deliver one kind of product and are making the right business decision by investing heavily in the model of improvement they have available. That this scaling-based model is producing rapidly diminishing returns is of course an existential problem, because it means they can’t actually deliver what they’ve spent years promising investors, but it’s perfectly logical for them to continue treading water until someone comes up with a new idea for how to improve these models.

But compare Apple to another established player: Mark Zuckerberg’s Meta. Over the past seven years, Meta has become a dog that chases every shiny new car that drives by. This started in 2018, when the then-Facebook launched the Libra stablecoin project, a clumsily conceived and catastrophically executed attempt to chase crypto and blockchain hype. Then Zuck notoriously went all-in on shared virtual reality, spending billions of dollars on “Reality Labs” and renaming the entire company after a project that is still losing $4 billion per quarter.

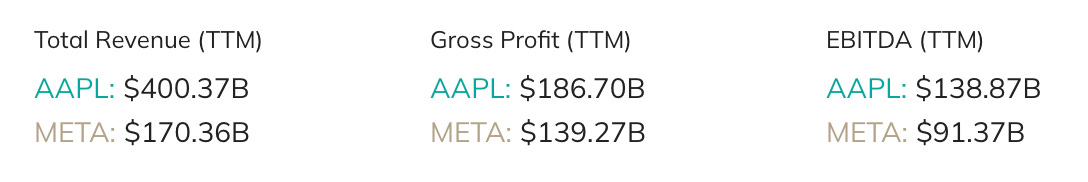

Here are the results of this all-in adventurism:

It’s not an apples-to-apples comparison - but only because Apple is also twice as big as Meta, despite their modern growth trajectories starting at roughly the same time.

That’s the compounding effect of making evidence-based decisions over time, instead of chasing hype to temporarily juice your stock price.

Thoroughly enjoyed your discussion of the Apple paper and contretemps.

Also you reminded me of what a national treasure Shenandoah National Park is.

https://bsky.app/profile/weusour.bsky.social/post/3lrbdkm5tak2a

"This means consumption of generated images will inescapably degrade your cognitive abilities, relative to exposure to real plants and trees."

Walden for the AI age.

--

Every article/study like this on tech heavy platforms has people saying "they buried the lede," "yes this is fascinating but not for the erroneous mainstream takeaway," "the study had these clear flaws," "no one's talking about this, which is actually proved and fascinating in this study, but yes the other part is completely wrong."

https://news.ycombinator.com/item?id=44203562

Ycombinator, of course, has a lot of Kool-Aid drinkers, but some of these points are interesting.